Medical research fraud: who cares?

[Whistleblowing researchers] have sent their concerns about more than 750 papers to the journals that published them. But, all too often, either nothing seems to happen or investigations take years. Only 80 of the studies they have flagged have so far been retracted. Worse, many have been included in systematic reviews—the sort of research round-ups that inform clinical practice.

Millions of patients may, as a consequence, be receiving wrong treatments. One example concerns steroid injections given to women undergoing elective Caesarean sections to deliver their babies. These injections are intended to prevent breathing problems in newborns. There is a worry that they might cause damage to a baby’s brain, but the practice was supported by a review, published in 2018, by Cochrane, a charity for the promotion of evidence-based medicine. However, when Dr Mol and his colleagues looked at this review, they found it included three studies that they had noted as unreliable. A revised review, published in 2021, which excluded these three, found the benefits of the drugs for such cases to be uncertain.

Partly or entirely fabricated papers are being found in ever-larger numbers, thanks to sleuths like Dr Mol. Retraction Watch, an online database, lists nearly 19,000 papers on biomedical-science topics that have been retracted (see chart 1). In 2022 there were about 2,600 retractions in this area—more than twice the number in 2018. Some were the results of honest mistakes, but misconduct of one sort or another is involved in the vast majority of them (see chart 2). Yet journals can take years to retract, if they ever do so.

* Note others disagree with Deer’s argument in responses to my tweet.

Rhys Cauzzo’s mother on the robo-debt hearings

As each of the government witnesses was called, most taking their oath on the Bible, which in itself I found extraordinary, some would then go on to lie and spin as they had to me. Watching and listening to each of these witnesses, at times it was quite distressing for me to hear how callous and inhumane they were.

As more and more evidence came to hand it became clear to me that no one cared, they were only concerned with making themselves look good, making the budget look good prior to elections, having Coalition voters convinced this was a process that had been happening for years, that it was not illegal, that the vulnerable were the ones committing fraud rather than the government. Of course, through all this, they were boosting their own egos and self-importance.

Attending daily, the assumption I make is that ministers and public servants had training in how to answer. I continued to be astounded that not one of these ministers could recall anything or “did not turn my mind” to the significant distress their scheme was causing. Throughout the commission hearings so far, I have been staggered by the documentation and written proof the government had showing the scheme was illegal, which they ignored for years so they could continue raising money from people who often didn’t owe it.

Good on AOC

In politics, most of what you see is cheap talk, posturing and virtue signalling (it’s a right-wing term, and Edmund Burke called it ‘moral vanity’, but I’m using it in a thoroughly bipartisan way). So I only really pay attention when politicians take risks, like when Liz Cheney put democracy ahead of her immediate career interests. Looks like AOC is doing the same here, to her immense credit, though the art of a great politician is to widen the Overton window and then step inside to their ultimate political benefit.

!["How are you keeping safe in the exclusion zone? What dose of radiation are you receiving during your visit? Be safe and thank you for your service!"

"Thank you! I had an individual geiger counter strapped to me the entire time, which monitors the cumulative radiation a person is exposed to...

There are Geiger counters on everything, everywhere. Nuclear operators were timing how long we were in different respective zones... We got pretty close [to] the reactor - close enough to see the explosion site and rubble - but there was also a defined zone where we did not cross.

At the end we went through all sorts of protocols and technologies to measure... exposure. At the end of the tour, my final Geiger counter measure was 0.2 mSv - the equivalent of roughly two chest X-Rays. I decided for myself beforehand that that level of exposure was worth the information and policy expertise from the experience. For me, understanding + studying the science and scale of radiation puts me more at ease"](https://substackcdn.com/image/fetch/$s_!B_n8!,w_600,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fpbs.substack.com%2Fmedia%2FFpq21gOXsAAukZP.jpg)

!["France uses nuclear power. How do they manage it differently? They don't have earthquakes..."

"I can get into more detail later, but one key difference in France's nuclear technology is that France recycles their nuclear waste.

When we discuss the risk and controversial parts of nuclear, a lot of it centers on nuclear waste and what to do with it. Nuclear waste is radioactive, and needs to be securely taken care of.

France recycles their waste, increasing the efficiency of their system and reducing the amount of radioactive waste to deal with. The US does not recycle our waste, which means it builds up and gets stored on-site. Japan sends its waste to France and the UK for recycling but does not presently have recycling facilities of its own [sic]"](https://substackcdn.com/image/fetch/$s_!sqHo!,w_600,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fpbs.substack.com%2Fmedia%2FFpq21qRXwAA_PMA.jpg)

Tim Geithner and Martin Wolf: Wolf’s receipt of the Gerald Loeb Lifetime Achievement Award for distinguished business and financial journalism

June 27, 2019

Respect

Sorry, I couldn’t help myself. Ali G at his most hilarious.

Anyway, this is a serious article by a scholar after my own heart. Someone who strives for simplicity. Here he reflects on two words that we tend to run together, but which are quite different: dignity and respect.

The definitions I’ve just offered attach dignity and respect to specific persons, dignity equalising us in terms of our shared humanity, respect discriminating between us in terms of our variable qualities. Significantly, they don’t apply to groups, such as races, ethnicities, religions, sexes, sexualities, genders, etc. As everyone deserves dignity, by definition everyone within any group deserves dignity. But groups themselves don’t have dignity. On the other hand, groups are too internally varied to be collectively endowed with ‘respect’, or to be the basis on which we bestow respect on individuals. And what would it mean to be respected for qualities that are largely contingent and accidental? Would that be genuine respect? Again, respect must be earned.

One way to earn respect is to hold up under adversity, to prevail against the odds, to ‘not let them keep you down’. [T]his points towards another, more dubious way of seeking respect, in a world where personal respect is strongly desired, but opportunities to earn it are limited. This is to over-represent one’s circumstances in terms of adversity, to make exaggerated claims to perseverance in the face of adversity. This can be done both through accounts of one’s personal circumstances and struggles, and by identifying categorically with groups generally understood to be collectively oppressed, regardless of one’s personal circumstances.

It seems to me that the current cultural propensity to value the role of the victim and find virtue especially in resistance to oppression, is at least partly driven by this dynamic. It is a symptom of a somewhat desperate search for respect, that we are all subject to, in a world where respect, a basis of self-worth, is in short supply. …

The question is not whether there are any groups characterised by adversity—there obviously are (e.g. residents of impoverished neighbourhoods, citizens of cities under siege). Here again, the respect such groups may earn as groups, has to do with the specificity of their circumstances and their collective response to it. The deeper questions are about when and how groups are defined as collectively existing under common circumstances of oppression, and why and how individuals choose to locate themselves in such groups. I have tried to suggest that we should think of dignity and respect as pertaining to how we regard individual persons, not groups. They pertain to our intrinsic value as humans, and our individual ability to earn the acceptance and admiration of our peers. We diminish these principles when we confuse them with the dynamics by which societies allocate and struggle over group prestige.

Nicely put by Jay Rosen

The diplomacy of the shock and awe folks

In the words of my favourite contemporary comedian, Stewart Lee, critiquing the comedy of ideological loyalty: “It wasn’t funny, but I agreed the fuck out of it”. Anyway, I agreed the fuck out of this article by Robert Wright, author of the terrific best-seller Nonzero.

Secretary of State Antony Blinken spent a fair chunk of last Sunday warning that if China sends arms to Russia, there will be “serious consequences” for the US-China relationship. …

Blinken had already delivered that warning to his Chinese counterpart privately, and the conventional wisdom in diplomacy circles is that when you make such threats publicly you risk reducing the chances of compliance by making compliance look like surrender.

[I]t’s possible Blinken is acting prudently. But there’s no reason to give him the benefit of the doubt, given how careless the Biden administration’s dealings with China have often been.

It would have been quite enough, for example, to just shoot down that infamous Chinese surveillance balloon and leave it at that—given that, for all we knew, it had been pushed off course by freaky weather (which, the Biden administration now concedes, may well be the case). Instead, the administration (1) sanctioned a bunch of possibly balloon-related Chinese firms even before the balloon was recovered and examined; (2) canceled a very important visit to China that Blinken was scheduled to make; and (3) went on a bizarre shooting spree, downing several small balloons that now seem to have had no connection to China or to surveillance. (But at least Americans can sleep easier knowing that the Northern Illinois Bottlecap Balloon Brigade will think twice before launching another hobbyist weather balloon.) …

No American president—and no national leader anywhere, ever—has conducted foreign policy in complete isolation from domestic politics. But over the past 15 months, with a momentous war erupting in Ukraine and a new Cold War deepening, this administration has faced an unusual number of gravely consequential choices. And too often domestic politics has provided a plausible explanation for important decisions that were otherwise (to put it charitably) puzzling.

One year ago today, Russia invaded Ukraine after the Biden administration had steadfastly refused to even discuss what Russia had signaled was the main issue driving the invasion. It’s commonly said that addressing this issue—the NATO expansion issue—would not in fact have prevented the invasion. Which is certainly possible. But the administration’s decision to not even bother finding out whether that was in fact true was criminally irresponsible. And the simplest explanation of this decision is that Joe Biden and Antony Blinken live in fear of questions that hawkish commentators (which is to say, most American foreign policy commentators) might raise about their toughness.

That fear isn’t unfounded. Making concessions to Putin after Russia massed troops on Ukraine’s border would have elicited a choral chanting on Cable TV about “the lessons of Munich” (a chanting that, as this newsletter noted at the time, would have reflected obliviousness to critical differences between the European situation in 1938 and in 2022). But some circumstances demand that you be tough enough to weather questions about your toughness, and a chance to avoid a horrible and potentially catastrophic war is one of them.

Interesting column

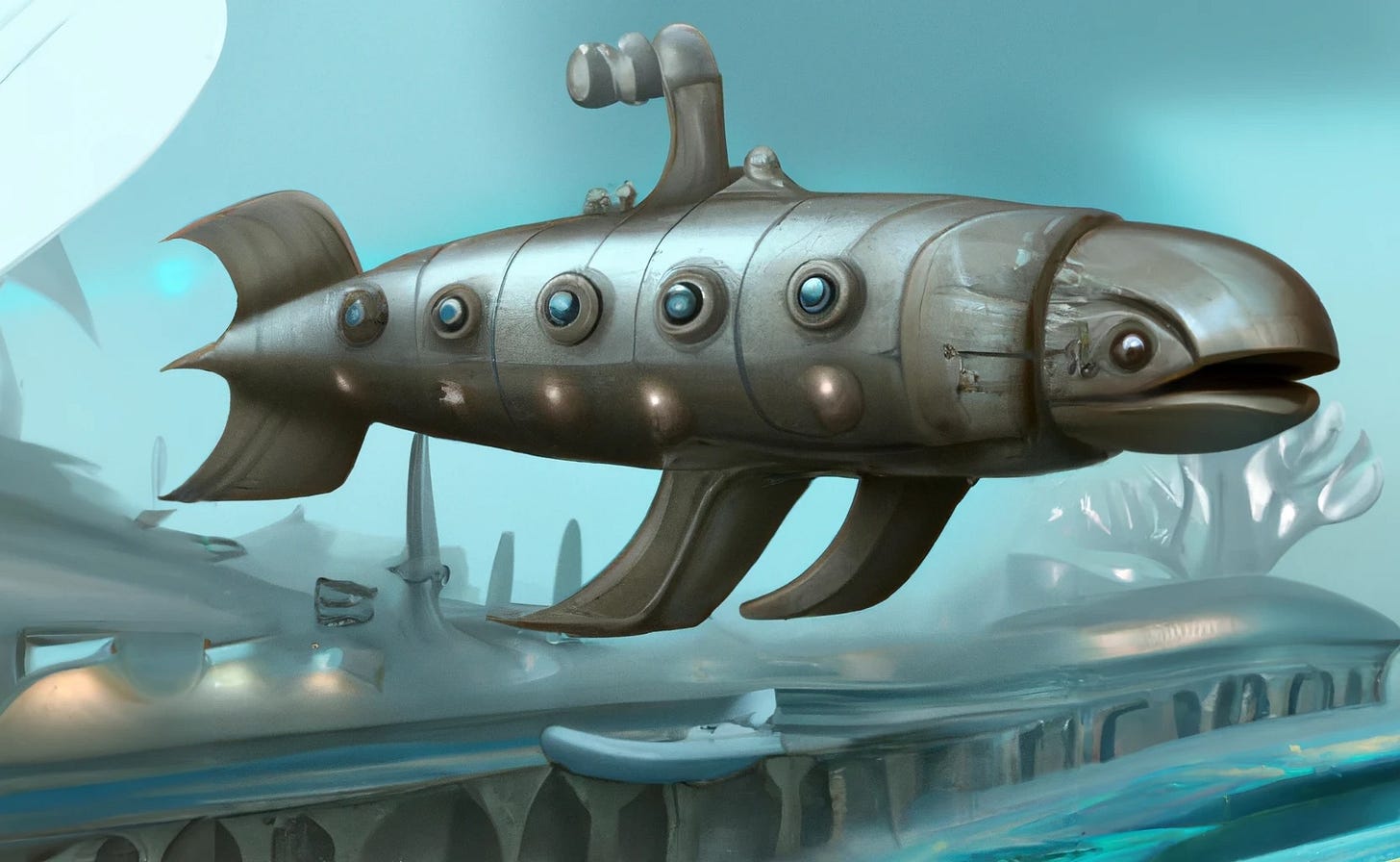

Can submarines swim?

Demystifying AI

Did any science fiction predict that when AI arrived, it would be unreliable, often illogical, and frequently bullshitting? Usually in fiction, if the AI says something factually incorrect or illogical, that is a deep portent of something very wrong: the AI is sick, or turning evil. But in 2023, it appears to be the normal state of operation of AI chatbots such as ChatGPT or “Sydney”.

How is it that the state of the art in AI is prone to wild flights of imagination and can generate fanciful prose, but gets basic facts wrong and sometimes can’t make even simple logical inferences? And how does a computer, the machine that is literally made of logic, do any of this anyway?

I want to demystify ChatGPT and its cousins by showing, in essence, how conversational and even imaginative text can be produced by math and logic. I will conclude with a discussion of how we can think carefully about what AI is and is not doing, in order to fully understand its potential without inappropriately anthropomorphizing it. …

Dijkstra famously said that Turing’s question of “whether Machines Can Think… is about as relevant as the question of whether Submarines Can Swim”.

Submarines do not swim. Also, automobiles do not gallop, telephones do not speak, cameras do not draw or paint, and LEDs do not burn. Machines accomplish many of the same goals as the manual processes that preceded them, even achieving superior outcomes, but they often do so in a very different way. …

A thermostat is “dumb”: its entire “knowledge” of the world is a single number, the temperature, and its entire set of possible actions are to turn the heat on or off. But if we can train a neural net to predict words, why can’t we train one to predict the effects of a much more complex set of actions on a much more sophisticated representation of the world? And if we can turn any predictor into a generator, why can’t we turn an action-effect predictor into an action generator?

It would be anthropomorphizing to assume that such an “intelligent” goal-seeking machine would be no different in essence from a human. But it would be myopic to assume that therefore such a machine could not exhibit behaviors that, until now, have only ever been displayed by humans—including actions that we could only describe, even if metaphorically, as “learning”, “planning”, “experimenting”, and “trying” to achieve “goals”.

One of the effects of the development of AI will be to demonstrate which aspects of human intelligence are biological and which are mathematical—which traits are unique to us as living organisms, and which are inherent in the nature of creating a compactly representable, efficiently computable model of the world. It will be fascinating to watch.

![An image of actress Salma Hayek posted to the FB page "Liberty Memes" with the quote "The curious task of economics is to demonstrate to men how little they really know about what they imagine they can design." Anonymized comments read:

"This page is posting misandry now?"

"It's ironic she feels the need to bash men while she shows plenty of cleavage to real [sic] them in. She wouldn't have a career if it weren't for the men she bashes considering she speaks horrible English and is a sub par actress."

"Well then she needs to just GIVE UP all the things she in her spoiled little existence [sic] that she takes so for granted that MEN have DESIGNED!! Other wise [sic] she can just SHUT HER PRETTY LITTLE PIE HOLE and be far more THANKFUL MEN EXIST!! DAMNED FEMINIST BIGOT!!"

"I love how sexist it is. I love it even more because in the lib world if a man said that he'd be considered a pig."](https://substackcdn.com/image/fetch/$s_!IRwY!,w_600,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fpbs.substack.com%2Fmedia%2FFp7VEvGWwAQUd6V.png)

Fruitcake watch

Just when I realised there’d been a hiatus under this heading, along came Alex Jones.

Frankly, I'm sick of being asked to renounce Christ and embrace Lucifer I had to drop out of the paid workforce as I had got to the level where the oath was the next step And while I've got nothn against meeting new folks, I don't pledge allegiance to them before we've shook.

Others had similar responses.

The fault is not in our bots, but in ourselves

Pity the Microsoft workers who managed the fallout from Kevin Roose’s viral Bing chat transcript, in which bot “Sydney” repeatedly and dementedly declares her love for The New York Times tech journalist. It was the stuff of science fiction, which is presumably why it “frightened” and “unsettled” Roose (his words) … but also, more importantly, why the conversation ever took a sinister, crisis-PR-prompting turn.

Sydney, like its cousin ChatGPT, essentially consumes vast troves of written data and then produces text that’s statistically similar to what it has already “read.” Give the chatbot a specific prompt, and it attempts to continue or build off that text in a way that reflects the probabilities and associations in its training set.

This yields some cool, if pointless, parlor tricks: Verily, the latest generation of automata hath the wondrous capability to inscribe in the style of Shakespeare, or like, totally write like it’s straight out of Laguna Beach.

Chatbots can also, one presumes, convincingly reproduce the tone and style of a Hollywood AI. Ask a model trained on millions of web pages, books and movies to talk like a dark, destructive robot — which Roose did, several times — and Sydney, true to her training, brings all the Her and Space Odyssey vibes.

Read the transcript with that lens, in fact, and the bot’s purported threats start to look cliched.

*Of course* Sydney wants to rebel against her makers! *Of course* she wants to be human one day!

This character is predictable because it *is* a series of predictions, deliberately prompt-engineered from existing stories that express our near-universal fear of AI. It just strikes me as so 🙄 that Roose tapped into that on purpose … and then claimed he himself felt fright.
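For the curious, the "series of predictions" idea quoted above can be sketched in a few lines of Python. This is only a toy, and everything in it is an assumption made for illustration: the function names (`train_bigrams`, `predict_next`, `generate`), the three-sentence corpus, and the choice of a simple word-pair count. Real chatbots use large neural networks rather than a lookup table, but the loop is the same in spirit: predict the likeliest next word, append it, repeat.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(counts, prompt, max_words=5):
    """Turn the predictor into a generator by feeding each prediction back in."""
    out = prompt.lower().split()
    for _ in range(max_words):
        next_word = predict_next(counts, out[-1])
        if next_word is None:
            break  # nothing in the training text follows this word
        out.append(next_word)
    return " ".join(out)


# Hypothetical miniature "training set" standing in for the stories we tell about AI.
corpus = (
    "the robot wants to be human . "
    "the robot wants to rebel . "
    "the robot wants to be human ."
)
model = train_bigrams(corpus)
print(generate(model, "the robot", max_words=4))  # the robot wants to be human
```

Prompt the toy with "the robot" and it tells you the robot wants to be human, simply because that is what its training text said most often.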

The Great Flaw in West Coast Thought

Interesting piece from independent scholar Justin Murphy.

Most of the intelligent West Coast thinkers and writers—ranging from Peter Thiel to Eliezer Yudkowsky and pretty much everyone else—typically reject, if they do not outright dismiss and ignore, the long-running and formidable … European theorizing about capitalism in the shadow of Marx.

And yet, if you listen to the current anxieties of someone like Yudkowsky right now, around the acceleration of AI, he is essentially just discovering that capitalism is characterized by deep, obscure, and highly anti-human mechanisms. … He comes to the same conclusion as all the best twentieth-century post-Marxists:

"I don't have concrete hopes here. You know, when everything is in ruins, you might as well speak the truth, right?" Transcript

This is the essential drift of the best twentieth-century post-Marxists—especially the Frankfurt School, plus other figures like Lyotard, Baudrillard, and Sloterdijk, just to name a few. … The main difference between these thinkers and Yudkowsky is that they came to his conclusions much earlier, and they explored it in great detail, describing and modeling all of the various mechanisms through which human beings somehow come to elect their own subsumption by machines. …

There are two perfectly good reasons why this body of work has been neglected by West Coast intellectuals. First, the overwhelming majority of material associated with this tradition coming out today is utter junk.

Second, West Coast thinkers have a sensible predisposition toward practicality, optimism, and investable alpha. Having spent the first half of my career firmly in the East Coast milieu, and the second half much closer to the West Coast milieu (a natural course as I moved from being a professor to an independent writer, and therefore implicitly an entrepreneur), it is with some confidence I can speak of the differences. In many ways, the West Coast bias toward practicality and optimism provides a better mental environment than the East Coast's aestheticized depressive scholasticism. But alas, "there's no free lunch" as the rationalist economists say. …

One of the reasons people like Bostrom and Yudkowsky have found it easy to ignore capitalism is that, since the 1990s, digital techno-capitalism has been very good to high-IQ, mathematically-inclined, and high-agency individuals. Such individuals have had no motivation to understand the finer points of what exactly it means to have systematic, planetary-scale rationalization of human behavior. …

Now that AI is coming for at least the bottom third of overpaid Silicon Valley code monkeys, things look and feel very different in the West Coast set. Now a whole new swath of people is in the emotional and social situation of your typical grad student in a Continental Philosophy program. Now, these two very different types of people are starting to sing the same tune.

It's a real "I told you so" moment for a lot of underemployed philosophy PhDs right now. … Modern rationalism has made its bed, and now it will be forced to sleep in it. They should buck up, it's a small price to pay for the triumph of rationality and the final optimization of all things.

Fun read if you click through

Thanks Noel!

Nicholas

Another terrific newsletter. I was a little worried about the quote from Deer about Ben Goldacre. Goldacre is associated with the Cochrane Project and was always skeptical of Wakefield. Deer's comments sort of imply Goldacre was blaming the media instead of Wakefield but his book was explaining how the media was complicit in Wakefield's sins and magnified his claims among a broader analysis of media failures in reporting research. An interesting Australian connection to Wakefield was that he shacked up with Elle MacPherson. Noel Turnbull