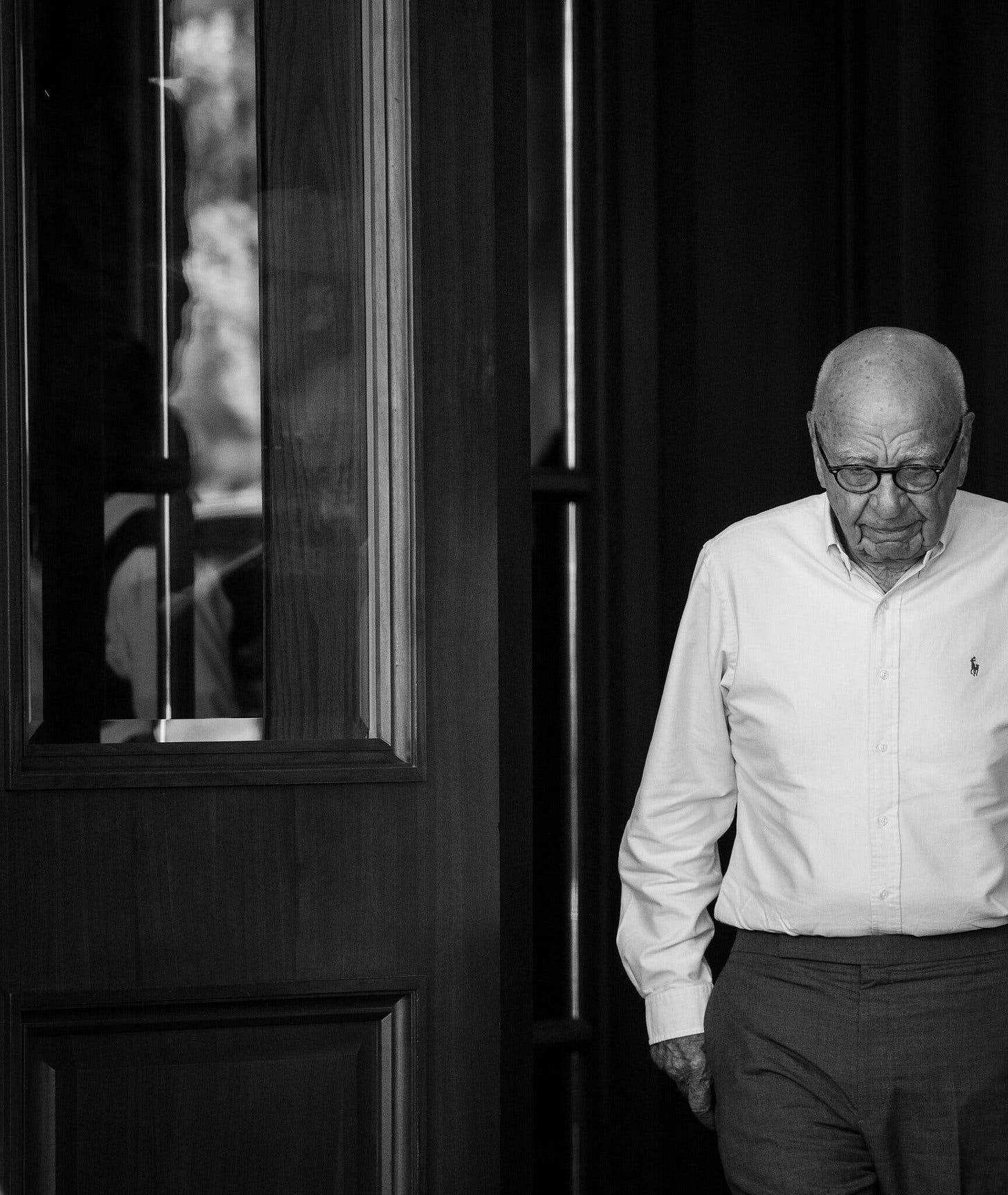

The Ludicrous Agony of Rupert Murdoch

My mother used to say “you dear old pet” if something was wrong. She used it without irony, but somehow it transferred to her son, who uses it to express faux sympathy. Rupert really is a dear old pet.

Writing this column must have been like shooting fish in a barrel, but I guess someone had to do it.

It’s nice to know that Fox News, which has so deranged America while making Rupert Murdoch ungodly sums of money, has in the end made Murdoch miserable, at least if the journalist Michael Wolff is to be believed. But the consolation is a small one.

Murdoch’s unhappiness and befuddlement is the throughline of Wolff’s amusingly vicious and very well-timed book, “The Fall: The End of Fox News and the Murdoch Dynasty,” which is to hit shelves next week, days after Murdoch, 92, announced his retirement from the Fox Corporation and News Corporation boards. Wolff paints Fox’s owner as embarrassed by the channel’s vulgarity and horrified by its ultimate political creation, Donald Trump. Murdoch apparently very much wants to thwart the ex-president, just not at the price of losing a single point in the ratings. …

Few people bear more responsibility for Trump than Murdoch. Fox News gave Trump a regular platform for his racist lies about Barack Obama’s birthplace. It immersed its audience in a febrile fantasy world in which all mainstream sources of information are suspect, a precondition for Trump’s rise.

As long as Murdoch is alive, the future of Fox is unwritten. Once he dies, his four oldest children will determine who controls it, and James may yet prevail. But Murdoch’s legacy is decided. We are hurtling toward another government shutdown, egged on by Hannity. The electorate that Fox helped shape, and the politicians it indulges, have made this country ungovernable. An unbound Trump may well become president again, bringing liberal democracy in America to a grotesque end. If so, it will be in large part Murdoch’s fault. “The Murdochs feel bad, about Tucker, about Trump, about themselves,” writes Wolff. Just not bad enough.

And speaking of ludicrous agonies, there are plenty of them about. Be sure to check out “The ludicrous agony of Facebook (and YouTube)” below.

Can we use the F word now?

Fascism, that is

Ziggy’s guide to getting to the top of PwC

A fabulous piece from the AFR. I didn’t know we still did commentary as well as this. But we do. Nice to see some holdouts against the zeitgeist. And where does that zeitgeist come from? The incentives, dear boy, the incentives. The thing is, the people who produced this generated a public good: something beautifully written, well observed and considered, and pervaded by an ethical sensibility. And there’s no money in any of that. That’s why we have institutions. Like PwC. To make sure things are done properly. Or maybe not …

(Drawn to my attention by a reader and friend.)

Anyone waiting for Ziggy Switkowski to outline the specific failures that led to PwC overlooking and profiting from its advance notice of the government’s multinational tax plans will be disappointed. Commissioned to do a “governance” review … the veteran director has delivered a portrait of Australia’s largest consultancy firm as a swamp. A foggy bog.

It’s all very interesting, and highly critical. But, overall, rather vague.

“There is a complex ‘tapestry’ of responsibility for conduct at PwC Australia,” Switkowski writes. What a line. No PwC partners failed their duty because the business’ processes render all as mere threads in an intricate weave. Look at Switkowski’s seeing-eye puzzle, stare up close for 30 seconds, then step back for the big reveal!

We needn’t have worried how Switkowski would feel writing about his former adviser and ex-PwC chief Luke Sayers. Switkowski hasn’t mentioned anyone by name at all. The Crown chairman and now-practised governance reviewer has instead neatly systematised the problem, removing it far above petty details such as the emails forwarded, briefings ignored and warnings unheeded. But that doesn’t make Switkowski’s investigation useless. If read as a guide to how to get ahead at the firm, it’s a veritable treasure map.

So, follow these simple steps, young buck, and you too can become implicated in a global tax-avoidance scheme one day. It won’t be easy, but just close your eyes and think of the harbourside mansion you’ll have to show for it.

At PwC, Switkowski’s investigations note, seniority is the intersection of tenure and popularity.

“To thrive as a partner in such a large and complex system, a strong internal network is critical, and sufficient energy is invested in cultivating this,” he writes.

It helps to start as a graduate. For outsiders, the PwC jungle is not easily navigated, described by “lateral hires” as “chaotic and out of control” until they twig that the only thing that really matters is who they know.

We do wonder at what stage in the investigation did Switkowski realise he could be describing the Cosa Nostra? We can softly hear the Ennio Morricone score already! …

“Winning the CEO election … relies on allegiances across the partnership,” the review states. Being a lifer helps, and in 2020 when Tom Seymour emerged triumphant, the final candidates all fit a similar mould, “having worked their way up the ranks”. Once there, these CEOs perpetuate the system. So, for the enterprising would-be partner, brown-nosing never hurts.

“The overwhelming perception is that a new CEO appoints close, trusted colleagues to key internal roles.” This replicates all the way down; key positions everywhere awarded on the basis of loyalty, with what Switkowski describes as little focus on “capability-based criteria”. Of course, outwardly, PwC’s promotions prioritise all sorts of diversity. Cognitively though, most are cut from the same cloth.

Climbing up the greasy pole in this world of skin-deep variety isn’t achieved by antagonising one’s colleagues. That’s a path to social and professional death. … A belief in the career-limiting impacts of dissenting views was reinforced to Switkowski “through stories told of people who had the courage to challenge but experienced negative consequences as a result”. One wonders if these individuals of “courage” within PwC’s walls view responsibility as an intricate “tapestry”? …

In Switkowski’s mind, if only PwC had better processes and governance, every Tom, Dick and Harry in an MJ Bale suit would not have committed the sins. But rotten cultures are not sprung into existence. They are created by rotten people making rotten choices. It’s the people, Ziggy. It always has been.

A new graphic genre

AI-generated, of course …

Dan's Big Build

I remember watching John Brumby proudly burnishing his credentials as Victoria’s low-debt premier while Melbourne groaned for more infrastructure. It was bad economics. I argued it was also bad politics. The government should borrow to invest in infrastructure, I would say, but the very serious people thought I was being naïve, very old-fashioned. It was, they said, a great electoral strength for Labor to have low debt because it made them a small target. I’d buy some of that if Labor had been in Opposition, where you barely get on the telly unless you’re wearing a silly hat (and university tests prove that silly hats make it difficult to persuade people of something, or even just to pose as someone sensible, which is what politicians tried to do back then). (Silly them! We’ve learned that’s not necessary.)

Anyway, John Brumby was swept away and, after a brief Liberal hiatus, Dan took office and spent up on the Big Build. And lo, as the people looked upon Dan’s intentions, they looked in awe and thought the Big Build was more worthwhile than mud-wrestling with trans activists (which was part of the Liberals’ schtick at the time). Surely it would have been nearly as politically popular to do all that building according to a rational plan. But why be sensible when you can be, well, silly?

This piece is sometimes ponderously or confusingly written, but seems pretty on the money — in more ways than one.

The Andrews government has never adopted a formal, public, long-term infrastructure strategy. It has published documents … but these are retrofilled scrapbooks based around projects chosen with little consideration of how they all work together. …

The Melbourne Metro, the megaproject Andrews spruiked on his way out the door, is one of vanishingly few projects running ahead of schedule — and even that project has run billions over budget. Others have been bigger fiascos: the West Gate Tunnel, … has become a quagmire — ensnared in complicated disputes over contaminated soil, three full years behind schedule and in the neighbourhood of $4.5 billion over budget, or almost double the initial price tag.

The Melbourne Airport Rail, meanwhile, due to connect Tullamarine Airport to the metro rail system in 2029, has been put on pause, alongside the Geelong Fast Rail, pending the federal government’s infrastructure review findings. The Suburban Rail Loop’s total cost has been estimated by the Parliamentary Budget Office at double the original forecast — more than $100 billion for the full project, which isn’t due to be fully complete until the 2080s. These half-built, might-never-be-complete projects stand as monuments to the government’s naivety, its impatience, its imprudence. …

[T]he private sector is also deeply involved in planning, assessing and even suggesting major projects. … Some of the Big Build’s biggest projects have been devised from their earliest stages by the likes of PwC and Transurban. It is companies like this that are setting the priorities, developing ideas, planning routes, proposing programs — policymaking on a vast scale, with an eye-watering budget and long-term consequences for millions of people, devised by private, profit-driven, non-transparent and unaccountable businesses.

And thanks to a reader for sending in this parallel story of infrastructure in the UK.

Parking? There’s an app for that

Kidnapped by the Church

And why not? I noticed this film at the Italian Film Festival and read up on the story. Quite the story. And not everyone gets to be brought up by someone who cooked up both the immaculate conception and papal infallibility: Pius IX.

From Wikipedia (note: if you want to see the film, you might want to stop after a couple of paragraphs in case the rest counts as a spoiler; it seemed fine to me, though):

1851. Edgardo Mortara is the sixth child of a Jewish family from Bologna, a city then part of the Papal States. Believing him sick and dying, the family’s Christian maid, Anna Morisi, secretly baptises him for fear that when he dies he will end up in limbo. The child survives, but seven years later Anna tells Pier Feletti, head of the Bolognese office of the Holy Inquisition, about the baptism: the sacrament is held to have made the child irrevocably Catholic, and since the norms of the Papal States forbid a Christian to be raised by non-Christians, Feletti decides to take the child away from his family. On 24 June Edgardo is forcibly taken and brought to Rome, where he is placed in the Casa dei Catecumeni, the boarding school for the children of converted Jews.

Edgardo's parents, Momolo and Marianna, do everything to draw public attention to the case, causing the indignation of European and non-European intellectuals. However, this causes Pope Pius IX to take the matter to heart: the papacy is in fact in a moment of strong political crisis, in which the figure of the pope has lost prestige and authority; Pius IX then decides to stand up to all the accusations, personally taking care of Edgardo's education and having him administered a second baptism or reception (this distinction is important in the later trial) that dispels any doubts about his belonging to the Catholic Church. Edgardo is raised and educated in a completely Catholic environment.

Months after Edgardo's arrival in Rome, Momolo and Marianna obtain permission to visit him. Members of the Roman Jewish community coldly welcome the two, as they are afraid of losing the privileges granted by the pope due to the media outcry aroused by the affair. Momolo, therefore, decides to treat Edgardo with detachment, telling him only that he's happy to find him in good health; in front of Marianna, however, the child bursts into tears and reveals to his mother that he still secretly recites the Shema Yisrael every night. Those in charge of Edgardo's education therefore forbid any future visits, unless the whole family converts to Catholicism. The Mortaras refuse and organize an attempted kidnapping of the child, which however fails and causes the total loss of support from the Jews of Rome.

In 1860 Bologna is taken from the pope by the rioters; the authorities of the Kingdom of Sardinia, to which the city now belongs, arrest Pier Feletti and put him on trial over the Mortara case. During the hearings, the whole story is reconstructed and it is established that the baptism administered by Anna Morisi is valid to all intents and purposes; Feletti is acquitted of the charges, as he acted in full compliance with the laws in force at the time of the events. Edgardo remains in Rome, where the Pope is still sovereign thanks to military assistance from France under Napoleon III. In 1870, when war breaks out between France and Prussia and the French troops are withdrawn, the Kingdom of Italy occupies Rome and the eleven-hundred-year history of the Papal States comes to an end, with Pope Pius IX losing his temporal power.

Meanwhile, Edgardo grows up in the care of the Pope. He studies for the priesthood and assumes the clerical name of Pio Maria. In 1870, with the breach of Porta Pia, Rome becomes part of the Kingdom of Italy. One of Edgardo's older brothers, Riccardo, who is a soldier in the occupying army, runs to look for him and tells him that he can finally return home. However, Edgardo refuses, stating that his real family is now the Catholic church. When Pius IX dies in 1878, Edgardo, in an impulsive act, joins the rioters who wish to throw his coffin into the Tiber. He regrets his action and runs away.

Years later, Marianna, Edgardo's mother, is dying and Edgardo, who years earlier had refused to go to his father's funeral, finally returns home. Taking advantage of a moment when he is alone with his mother, the young man tries to baptise her, but she refuses, declaring that she lives as a Jew and wants to die as a Jew. Edgardo is driven away by his siblings after this attempted conversion. This appears to be his family's final break with Edgardo. The film's closing credits state that Edgardo was ordained as a priest in the Canons Regular, worked throughout Europe as a missionary and preacher and that he died in a monastery in Belgium in 1940 at the age of 88.

A fun interview

Rory couldn’t believe the utter lack of seriousness in contemporary politics. But we’ve all seen it. And enough people vote for it to keep it going. One of the saddest things about our time.

Michael Polanyi on the good and the bad of Karl Marx and Adam Smith

From a fascinating 1948 essay titled “Forms of Atheism”.

About 1820 Fourier wrote that in the Phalanstere every child will easily master twenty different industrial arts—both physical and intellectual—by the age of four. From this crazy statement to those of our own time, announcing that science had solved the problem of abundance and that we had now to plan an Age of Plenty, we find an uninterrupted series of similar paranoid manifestations. We must now vigorously shake off this whole swarm of daydreams.

In view of recent historic experience, I should outline the scope of social improvement as follows. We are committed to a mode of production based on a large number of highly specialized industrial plants drawing on a great variety of resources and catering for myriads of different personal demands. This method could be discontinued only at the price of reducing the population of the West to a fraction of its present numbers and would make the remainder miserably poor and utterly defenceless. I do not feel that this is a possible line of policy.

Marx was right on the whole in saying that the utilization of a certain technique of production is possible only within the framework of certain institutions. He rightly recognized, with the followers of Adam Smith, the system of private enterprise operating for a market as the adequate embodiment of industrialism, as it existed then. He was wrong in assuming that this technique of production was in the course of being replaced by another which would require to be embodied in a centrally directed economic system. His forecast of progressive capitalist concentration was clever, but extravagant.

The followers of Adam Smith were wrong in letting their onslaught on protectionism grow into a glorification of capitalism as a state of economic perfection. They were doubly wrong in opposing regulative economic legislation on principle, instead of welcoming it as an essential condition for the rational operation of capitalism. Marx was right in attacking the evils of unregulated capitalism and closer to the truth than his opponents among classical economists in exposing the deep-seated economic disharmonies manifested in recurrent mass unemployment. His manner of evaluating these observations, however, was again fantastic. His blind faith in progress made him conclude that since capitalism was faulty, it would necessarily be supplanted by a new set of institutions, which would eliminate these imperfections.

As Columbus inevitably identified the Antilles with India which he had set out to discover, Marx identified the new system of which he had thus thought to have proved the necessity with Socialism. This was the argument for which he claimed that it transformed Socialism from a Utopia into a science. The same manner of reasoning can be observed even today wherever the demand for Socialism is derived from an exposure of the shortcomings of Capitalism. It underlies the most advanced socialist theories which expose the general imperfections of capitalist competition and expect Socialism to restore the perfect competitive market.

Using AI to reduce educational trauma

(Oh — and improve achievement!)

Andy Haldane, who left a long career at the Bank of England to head up the Royal Society of Arts (RSA), was my favourite public servant anywhere and gave great speeches as the Bank’s Chief Economist. He’s now got a regular gig at the FT, and here’s one of his columns:

In the UK, a terrifying one in five young people aged 17 to 24 were reported as having a probable mental health disorder in 2022. Of course, not all are rooted in education and examinations. But the correlation is strikingly high between the incidence of mental health problems and low educational attainment in young people. It can be seen, too, in surveys of wellbeing — as many as a quarter of young people leave education loathing, rather than loving, learning. It would be hard to think of a worse endowment.

This means those departing education with fewest skills are also least likely to engage in life-long learning. And it means the gaps in attainment in early years are likely to widen over time, becoming chasms in adult opportunity and income. Education can entrench inequality, rather than redress it. What could be done to close those chasms? A good start would be to rethink the metrics of success.

Currently, examination results and school league tables are paramount. But if the key arbiter of later-life success is learning attitudes and experiences, rather than outcomes per se, it would be better to target those directly by measuring pupil wellbeing. That’s not as idealistic as it sounds. In the UK, the #BeeWell initiative puts young people’s wellbeing at the heart of measuring achievement, and how well education is designed. After a pilot in Greater Manchester, this initiative is being rolled out to nearly 10 per cent of pupils across England this year.

To date, limits on resources have meant personalised learning was not practical for most pupils. While learning is often adjusted in its pace and content to different needs, truly personalised learning, tailored in its aims, outcomes and pedagogy, has remained out of reach. Until now.

This is because digital technologies, and artificial intelligence in particular, are freeing us from our 19th-century learning constraints. The booming global “edtech” sector, now worth well in excess of $100bn each year, is testament to that.

Estonia, often a digital first-mover, is developing infrastructure using AI to give teachers and students greater autonomy, enhancing pupils’ experience and sense of agency. Has this damaged education outcomes? To the contrary, Estonia is the highest-ranked country in Europe on the Programme for International Student Assessment (Pisa) scale.

Personalised medicine, attuned to our individual biologies, is fast becoming a practical reality. Regrettably, personalised education, attuned to our individual brains and circumstances, is less advanced. Implementing such a programme would be the most significant shift in education practice in a century. But the potential dividend, for individuals and economies, could be huge.

It could help rescue life-long learning from the realms of rhetoric.

Do masks work? Hint: of course they do

And of course you can use them ineffectively

Good piece in Scientific American:

Recently a Cochrane review, which systematically assesses multiple randomized controlled trials, provoked headlines after claiming a lack of evidence that masks prevent transmission of many respiratory viruses. Not for the public, health care workers, or anyone. “There is just no evidence that they make any difference,” the lead author said in a media interview. This brought an unusual chastisement from the Cochrane Library’s editor-in-chief, who stated it was “not an accurate representation of what the review found.” …

In the 1980s … Refining medical decision-making to make it more repeatable, consistent and linked to evidence marked the laudable birth of the evidence-based medicine movement. This effort included establishing a “hierarchy of evidence,” the idea that some types of evidence are more useful to medical decision-makers than others. Expert opinion and observational studies are at the bottom of the pyramid, randomized trials in the middle, and at the top, systematic reviews of these trials, where researchers compile and review several clinical trial results to make broader, more conclusive statements, as happens with a Cochrane review. …

In many scientific disciplines randomized trial methods are fundamentally inappropriate—akin to using a scalpel to mow a lawn. If something can be directly measured or accurately and precisely modeled, there is no need for complex, inefficient trials that put participants at risk. Engineering, perhaps the most “real-world” of disciplines, doesn’t conduct randomized trials. Its necessary knowledge is well-understood. Everything from highways to ventilation systems—everything that moves us, cleans our air and our water, and puts satellites into orbit—succeeds without needing them. This includes many medical devices. When failures like a plane crash or catastrophic bridge collapse do occur, they are recognized and systematically analyzed to ensure they don’t happen again. The contrast with the lack of attention paid to public health failures in this pandemic is stark.

“Does a mask protect me from aerosolized virus?” or “Does this seat belt keep me from flying through the window in an accident?” are different types of questions than “Does aspirin reduce death rates after a heart attack?” Imprisoning engineering and the natural sciences at the very bottom of an evidence hierarchy—at the same level as an expert opinion—is a mistake. As with seat belts, whether people use masks properly matters, but no randomized trial could conclude seat belts “don’t work.” At best, that type of trial would be a truly inefficient way to assess specific instructions and incentives to get people to use them properly.

Quite. Given this, as I’ve argued, RCTs should not be regarded as the ‘gold standard’ in public policy.

The Political Economy of Technology

Thanks to reader Robert Banks for referring me to this long and comprehensive review of Acemoglu and Johnson’s Power and Progress, which takes an axe to the ‘trickle-down’ theory of productivity gains from technological development. The book argues that the dividends of productivity growth tend to be captured by wealthy and powerful elites in the absence of countervailing power ensuring that workers and customers get to be part of the deal. A particularly compelling story from the book appears in the last section below.

The authors’ formula, that the elites always run away with the loot, is a little simplistic. Lots of gains are enjoyed outside the elites, even when countervailing power isn’t so great, if productivity growth goes on long enough, as happened in the second half of the 19th century. But it’s nice to get a corrective to the usual bromides, which tend to counsel complacency.

Here’s the part of the review covering the authors’ policy suggestions for the future. The problem is that it’s hard to see much of this getting very far given the state of our media and politics. Alas, the authors say little about this, though I think there are important fronts on which the battle can be fought.

Acemoglu and Johnson propose a varied, complementary set of pathways to constrain and shape the impact of the new technologies. They begin with the need for workers’ organizations adapted to today’s labor market, which is far more decentralized than the one in which mid-twentieth-century industrial unions thrived. One hope is that the same technologies that enable the algorithmic management of work in Walmart can be used by workers to construct a new kind of solidarity.

Acemoglu and Johnson point to successful, if limited, union drives in some Amazon and Starbucks locations. And though their book was written before the Teamsters negotiated a new contract with UPS that could have substantial spillover effects on unorganized FedEx drivers, it offers ample evidence that seemingly isolated events often have systemic importance. To be sure, they recognize that identifying the actual distributional effects of a technology’s deployment is extremely challenging, and that formulating interventions to move deployment toward machine-useful applications is even more so. After all, the unintended consequences of interventions in this domain can hardly be known in advance.

Nonetheless, Acemoglu and Johnson point out that observable shifts in labor’s share of value added can be reliably measured at the firm, industry, and national levels, offering a barometer of technology’s impact. In this regard, they recommend “a plurality” of experiments in how technology is used, with rewards on offer for the most promising applications. But they reject pre-emptive taxes on certain uses, given the climate of uncertainty in which policy must be made.

Their approach thus aligns with the strategy of President Joe Biden’s administration for directing the US economy toward green technologies; it is based almost entirely on carrots, rather than sticks. One area where Acemoglu and Johnson’s proposals are especially clear-eyed and incisive is antitrust regulation. They would supplement antitrust enforcement with a variety of complementary initiatives, including a repeal of Section 230 – the notorious law that shields Big Tech companies from any liability for what users post on their platforms – and new taxes on digital advertising. In a similarly progressive vein, they call for reforms to rebalance the incidence of federal taxation, which very substantially favors returns on capital over wages from labor.

After taking account of Social Security taxes, they note that an incremental $100,000 spent by a firm on hiring labor incurs a 25% tax bill jointly for the employer and employee, whereas taxes on new equipment for the same tasks amount to less than 5%. To shift the balance of labor-market interventions, they favor subsidies for worker training both in the firm and outside. But they steadfastly reject appeals for a universal basic income (“UBI”), which they see as a profoundly wrong-headed concession to some techno-visionaries’ dream of automating human work out of existence. In addition to depriving people of a sense of earned reward for effort, a UBI, they might have added, would also redirect society’s resources away from the provision of public goods like roads and libraries. Indeed, it represents another expression of the “methodological individualism” that animated British Prime Minister Margaret Thatcher’s famous assertion that “there is no such thing [as society].”

The ludicrous agony of Facebook (and YouTube)

I’m currently reading Daron Acemoglu and Simon Johnson’s much-hyped latest tome, Power and Progress. It’s a good romp through the history of technology from a social democratic perspective. I know Acemoglu is one of the most highly regarded economists around, but I’ve never found his writing very insightful. I still don’t. But if the book doesn’t give us many clues as to what steps might save us from the onslaught of profit-maximising destruction of our culture (other than to stop doing the kinds of things it documents), it is nevertheless good as a Cook’s tour of our current predicament and as a trove of interesting facts about the past.

If what is disclosed below were going on in factories, people would be in jail for industrial manslaughter. Anyway, you can’t make an omelette (at least an obscenely profitable one) without breaking a large number of things (including, obviously, thousands of innocent lives). And it’s not all bad either. If you’re a politician, or sometimes even an ex-politician like Nick Clegg, Facebook will probably be pretty accommodating. You just have to help people see things the way they should be seen.

Social Media and Paper Clips

Internet censorship and even high-tech spyware may say nothing about the potential of social media as a tool to improve political discourse and coordinate opposition to the worst regimes in the world. That several dictatorships have used new technologies to repress their populations should not surprise anybody. That the United States has done the same is also understandable when you think about its security services’ long tradition of lawless behavior that has only been amplified with the “War on Terror.” Perhaps the solution is to double down on social media and allow more connectivity and unencumbered messaging to shine a brighter light on abuses. Alas, AI-powered social media’s current path appears almost as pernicious for democracy and human rights as top-down internet censorship.

The paper-clip parable is a favorite tool of computer scientists and philosophers for emphasizing the dangers that superintelligent AI will pose if its objectives are not perfectly aligned with those of humanity. The thought experiment presupposes an unstoppably powerful intelligent machine that gets instructions to produce more paper clips and then uses its considerable capabilities to excel in meeting this objective by coming up with new methods to transform the entire world into paper clips. When it comes to the effects of AI on politics, it may be turning our institutions into paper clips, not thanks to its superior capabilities but because of its mediocrity.

By 2017, Facebook was so popular in Myanmar that it came to be identified with the internet itself. The twenty-two million users, out of a population of fifty-three million, were fertile ground for misinformation and hate speech. One of the most ethnically diverse countries in the world, Myanmar is home to 135 officially recognized distinct ethnicities. Its military, which has ruled the country with an iron fist since 1962, with a brief period of parliamentary democracy under military tutelage between 2015 and 2020, has often stoked ethnic hatred among the majority-Buddhist population. No other group has been as often targeted as the Rohingya Muslims, whom the government propaganda portrays as foreigners, even though they have lived there for centuries. Hate speech against the Rohingya has been commonplace in government-controlled media.

Facebook arrived in this combustible mix of ethnic tension and incendiary propaganda in 2010. From then on it expanded rapidly. Consistent with Silicon Valley’s belief in the superiority of algorithms to humans and despite its huge user base, Facebook employed only one person to monitor Myanmar, and that person spoke Burmese but not most of the other hundred or so languages used in the country.

In Myanmar, hate speech and incitement were rife on Facebook from the beginning. In June 2012 a senior official close to the country’s president, Thein Sein, posted this on his Facebook page:

It is heard that Rohingya Terrorists of the so-called Rohingya Solidarity Organization are crossing the border and getting into the country with the weapons. That is Rohingyas from other countries are coming into the country. Since our Military has got the news in advance, we will eradicate them until the end! I believe we are already doing it.

The post continued: “We don’t want to hear any humanitarian issues or human rights from others.” The post was not only whipping up hatred against the Muslim minority but also amplifying the false narrative that Rohingya were coming into the country from the outside.

In 2013 the Buddhist monk Ashin Wirathu, dubbed the face of Buddhist terror by Time magazine that same year, was posting Facebook messages calling the Rohingya invading foreigners, murderers, and a danger to the country. He would eventually say, “I accept the term extremist with pride.”

Calls from activists and international organizations for Facebook to clamp down on misleading information and incendiary posts kept growing. A Facebook executive admitted, “We agree that we can and should do more.” Yet by August 2017, whatever Facebook was doing was far from enough to monitor hate speech. The platform had become the chief medium for organizing what the United States would eventually call a genocide.

The popularity of hate speech on Facebook in Myanmar should not have been a surprise. Facebook’s business model was based on maximizing user engagement, and any messages that garnered strong emotions, including of course hate speech and provocative misinformation, were favored by the platform’s algorithms because they triggered intense engagement from thousands, sometimes hundreds of thousands, of users.

Human rights groups and activists brought up these concerns about mounting hate speech and the resulting atrocities with Facebook’s leadership as early as 2014, with little success. The problem was at first ignored and activists were stonewalled, while the amount of false, incendiary information against the Rohingya continued to balloon. So did evidence that hate crimes, including murders of members of the Muslim minority, were being organized on the platform. The company’s reluctance to act on the hate-crime problem was not because it did not care about Myanmar. When the country’s government shut down Facebook, the company’s executives immediately jumped into action, fearing that the shutdown might drive away some of the platform’s twenty-two million users in the country.

Facebook also accommodated the government’s demands in 2019 to label four ethnic organizations as “dangerous” and ban them from the platform. Their pages, though associated with ethnic separatist groups such as the Arakan Army, the Kachin Independence Army, and the Myanmar National Democratic Alliance Army, were some of the main repositories of photos and other evidence of murders and other atrocities committed by the army and extremist Buddhist monks.

When Facebook finally responded to earlier pressure, its solution was to create “stickers” to identify potential hate speech. The stickers would allow users to post messages that included harmful or questionable content but would warn them, “Think before you share” or “Don’t be the cause of violence.” However, it turned out that just like a dumb version of the paper clip–obsessed AI program, Facebook’s algorithm was so intent on maximizing engagement that it registered harmful posts as being more popular because people were engaging with the content to flag it as harmful. The algorithm then recommended this content more widely in Myanmar, further exacerbating the spread of hate speech.

Facebook does not seem to have learned Myanmar’s lessons well. In 2018 similar dynamics began to play out in Sri Lanka, with posts on Facebook inciting violence against Muslims. Human rights groups reported the hate speech, but to no avail. In the assessment of one researcher and activist, “There’s incitements to violence against entire communities and Facebook says it doesn’t violate community standards.”

Two years later, in 2020, it was India’s turn. Facebook executives overrode calls from their employees and refused to remove Indian politician T. Raja Singh, who was calling for Rohingya Muslim immigrants to be shot and encouraging the destruction of mosques. Many were indeed destroyed in anti-Muslim riots in Delhi that year, which also killed more than fifty people.

Misinformation Machine

The problems of hate speech and misinformation in Myanmar are paralleled by how Facebook has been used in the United States, and for the same reason: hate speech, extremism, and misinformation generate strong emotions and increase engagement and time spent on the platform. This enables Facebook to sell more individualized digital ads.

During the US presidential election of 2016, there was a remarkable increase in the number of posts with either misleading information or demonstrably false content. Nevertheless, by 2020, 14 percent of Americans viewed social media as their primary source of news, and 70 percent reported receiving at least some of their news from Facebook and other social media outlets.

These stories were not just a sideshow. A study of misinformation on social media concluded that “falsehood diffused significantly farther, faster, deeper, and more broadly than the truth in all categories of information.” Many of the blatantly misleading posts went viral because they kept being shared. But it was not just users propagating falsehoods. Facebook’s algorithms were elevating these sensational articles ahead of both less politically relevant posts and information from trusted media sources.

During the 2016 presidential election, Facebook was a major conduit for misinformation, especially for right-leaning users. Trump supporters often arrived at sites propagating misinformation via Facebook, while less traffic flowed from social media to traditional media. Worse, recent research documents that people tend to believe posts with misinformation because they are bad at remembering where they saw a piece of news. This may be particularly significant because users often receive unreliable and sometimes downright false information from like-minded friends and acquaintances. They are also unlikely to be exposed to contrarian voices in these echo chamber–like environments.

Echo chambers may be an inevitable by-product of social media. But it has been known for more than a decade that they are exacerbated by platform algorithms. Eli Pariser, internet activist and executive director of MoveOn.org, reported in a 2011 TED talk that although he followed many liberal and conservative news sites, after a while he noticed he was directed more and more to liberal sites because the algorithm had noticed he was a little more likely to click on them. He coined the term filter bubble to describe how algorithmic filters were creating an artificial space in which people heard only voices that were already aligned with their political views.

Filter bubbles have pernicious effects. Facebook’s algorithm is more likely to show right-wing content to users who have a right-leaning ideology, and vice versa for left-wingers. Researchers have documented that the resulting filter bubbles exacerbate the spread of misinformation on the social media site because people are influenced by the news items they see. These filter-bubble effects go beyond social media. Recent research that incentivized some regular Fox News users to watch CNN found that exposure to CNN content had a moderating effect on their beliefs and political attitudes across a range of issues. The main reason for this effect appears to be that Fox News was giving a slanted presentation of some facts and concealing others, pushing users in a more right-wing direction. There is growing evidence that these effects are even stronger on social media.

Although there were hearings and media reaction to Facebook’s role in the 2016 election, not much had changed by 2020. Misinformation multiplied on the platform, some of it propagated by President Donald Trump, who frequently claimed that mail-in ballots were fraudulent and that noncitizen immigrants were voting in droves. He repeatedly used social media to demand that the vote count be stopped.

In the run-up to the election, Facebook was also mired in controversy because of a doctored video of House Speaker Nancy Pelosi that made her appear drunk or ill, slurring her words. The fake video was promoted by Trump allies, including Rudy Giuliani, and the hashtag #DrunkNancy began to trend. It soon went viral and attracted more than two million views. Crazy conspiracy theories, such as those that came from QAnon, also circulated uninterrupted in the platform’s filter bubbles. Documents provided to the US Congress and the Securities and Exchange Commission by former Facebook employee Frances Haugen reveal that Facebook executives were often informed of these developments.

As Facebook came under increasing pressure, its vice president of global affairs and communications, former British deputy prime minister Nick Clegg, defended the company’s policies, stating that a social media platform should be viewed as a tennis court: “Our job is to make sure the court is ready—the surface is flat, the lines painted, the net at the correct height. But we don’t pick up a racket and start playing. How the players play the game is up to them, not us.”

In the week following the election, Facebook introduced an emergency measure, altering its algorithms to stop the spread of right-wing conspiracy theories claiming that the election was in reality won by Trump but stolen because of illegal votes and irregularities at ballot boxes. By the end of December, however, Facebook’s algorithm was back to its usual self, and the “tennis court” was open for a rematch of the 2016 fiasco.

Several extremist right-wing groups, as well as Donald Trump, continued to propagate falsehoods, and we now know that the January 6, 2021, insurrection was partly organized using Facebook and other social media sites. For example, members of the far-right militia group Oath Keepers used Facebook to discuss how and where they would meet, and several other extremist groups live-messaged each other over the platform on January 6. One of the Oath Keepers’ leaders, Thomas Caldwell, is alleged to have posted updates as he entered the Capitol and to have received messages over the platform with directions for navigating the building, as well as messages inciting violence toward lawmakers and police.

Misinformation and hate speech are not confined to Facebook. Around 2016, YouTube emerged as one of the most potent recruitment grounds for the Far Right. In 2019, Caleb Cain, a twenty-six-year-old college dropout, posted a YouTube video explaining how he had been radicalized on the platform. As he put it, “I fell down the alt-right rabbit hole.” Cain explained how he “kept falling deeper and deeper into this” as he watched more and more radical content recommended by YouTube’s algorithms.

Journalist Robert Evans studied how scores of ordinary people around the country were recruited by these groups, and he concluded that YouTube was the website the recruits themselves mentioned most often: “15 out of 75 fascist activists we studied credited YouTube videos with their red-pilling.” (“Red-pilling” is these groups’ lingo, a reference to the movie The Matrix: accepting the truths propagated by these far-right groups was the equivalent of taking the red pill in the movie.)

YouTube’s algorithmic choices and its drive to boost watch time on the platform were critical for these outcomes. To increase watch time, in 2012 the company modified its algorithm to give more weight to the time that users spend watching content rather than just to whether they click on it. This algorithmic tweak started favoring videos that people became glued to, including some of the more incendiary extremist content, the sort that Cain became hooked on.

In 2015 YouTube engaged a research team from its parent company’s AI division, Google Brain, to improve the platform’s algorithm. The new algorithms then created more pathways for users to become radicalized, while, of course, spending more time on the platform. One of Google Brain’s researchers, Minmin Chen, boasted at an AI conference that the new algorithm was successfully altering user behavior: “We can really lead the users towards a different state, versus recommending content that is familiar.” This was ideal for fringe groups trying to radicalize people. It meant that users watching a video on 9/11 would quickly be recommended content on 9/11 conspiracies. With about 70 percent of all videos watched on the platform coming from algorithmic recommendations, this left plenty of room for misinformation and manipulation to pull users into the rabbit hole.

Twitter was no different. As former president Trump’s favorite communication medium, it became an important tool for right-wingers to communicate with one another (and, separately, for left-wingers as well). Trump’s anti-Muslim tweets were widely disseminated and subsequently caused not just more anti-Muslim and xenophobic posts on the platform but also actual hate crimes against Muslims, especially in states where the president had more followers.

Some of the worst language and most consistent hate speech were propagated on other platforms, such as 4chan, 8chan, and Reddit, including its various subreddits such as The_Donald (where conspiracy theories and misinformation related to Donald Trump originate and circulate), Physical_Removal (advocating the elimination of liberals), and several others with explicitly racist names that we prefer not to print here. In 2015 the Southern Poverty Law Center named Reddit as the platform hosting “the most violent racist” content on the internet.

Was it unavoidable that social media should have become such a cesspool? Or was it some of the decisions that leading tech companies made that brought us to this sorry state? The truth is much closer to the latter, and the explanation also answers the question posed in Chapter 9: Why has AI become so popular even if it is not massively increasing productivity and outperforming humans?

The answer, and the reason for the specific path that digital technologies took, is the revenue that companies collecting vast amounts of data can generate using individually targeted digital advertising. But digital ads are only as good as the attention that people pay to them, so this business model meant that platforms strove to increase user engagement with online content. The most effective way of doing this turned out to be cultivating strong emotions such as outrage or indignation.

Also, re: Catholicism, George Pell, and why true Catholics do bad things: well, because it is an order. He joined one, after all. That's why they are called orders. https://whyweshould.substack.com/p/to-build-a-better-world-we-should

The pagan as country dweller is not the whole story. Apparently Roman legionaries used the term the way we use “civilian” to refer to, well, civilians. So part of the story is that if you are a member of a Christian army, then the non-members are civilians or, thus, pagans. So the word was in use before Christianity, and in a similar way, to refer to the not-us. The fact that city populations live closer to centres of power and are more sensitive to control by the state may have led to a folksonomy (but not an untrue one). I haven't confirmed this, but I read it last week in Prudence Jones and Nigel Pennick, A History of Pagan Europe (New York: Barnes & Noble, 1999).